In this blog i am trying to explain you how to load bulk data into PostgreSQL more faster than copy command .

here i am using "pg_bulkload" binary/utility for loading data into PostgreSQL .I am covering installation/configuration of pg_bulkload and loading data .

Let's start from scratch .

Introduction : -

I assumes the following:

I have created one table to get data loaded into that table .

TYPE = CSV | BINARY/FIXED | FUNCTION

The type of input data. The default is CSV.

here i am using "pg_bulkload" binary/utility for loading data into PostgreSQL .I am covering installation/configuration of pg_bulkload and loading data .

Let's start from scratch .

Introduction : -

pg_bulkload is designed to load huge amount of data to a database. You can choose whether database constraints are checked and how many errors are ignored during the loading. For example, you can skip integrity checks for performance when you copy data from another database to PostgreSQL. On the other hand, you can enable constraint checks when loading unclean data.

The original goal of pg_bulkload was an faster alternative of COPY command in PostgreSQL, but version 3.0 or later has some ETL features like input data validation and data transformation with filter functions.

I assumes the following:

- PostgreSQL must have been installed .

- The database has been initialized using initdb.

Installation : -

Download source code from below link

wget https://github.com/ossc-db/pg_bulkload/archive/master.zip

unzip master

|

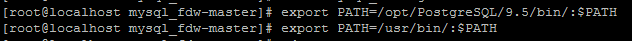

If environment variable is not set then ,Manually export path of pg_config

export PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/usr

/local/postgresql9.6/bin/

|

cd pg_bulkload-master

make

make install

|

After Installation ,You can run regression testing

make installcheck

|

Configuration : -

First connect to the DB and create pg_bulkload extension .

| create extension pg_bulkload ; |

Test case : -

I have created one table to get data loaded into that table .

- Copy data into table via copy command .

Time taken : - 14 seconds

- Load data via bulk-load utility .

Edit control file that includes settings for data loading

Control file can accept below Input file types

- CSV : load from a text file in CSV format

- BINARY | FIXED : load from a fixed binary file

- FUNCTION : load from a result set from a function.

If you use it, INPUT must be an expression to call a function.

Basic configuration/settings to load data from csv file .

#File Name = /tmp/one_csv.ctl

OUTPUT = public.bulkload_demo # [<schema_name>.]table_name

INPUT = /tmp/bulkload_demo.csv # Input data location (absolute path)

TYPE = CSV # Input file type

QUOTE = "\"" # Quoting character

ESCAPE = \ # Escape character for Quoting

DELIMITER = "," # Delimiter

MULTI_PROCESS = yes

|

Start loading data by using below command .

pg_bulkload /tmp/one_csv.ctl -d bulkload_test

|

Time taken : - 4 Seconds

Reference/Useful Links : -

Detailed information on other switches of pg_bulkload .

Pre-defined test case .